Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here’s a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn’t cover on their own.

This week in AI, Google paused its AI chatbot Gemini’s ability to generate images of people after a segment of users complained about historical inaccuracies. Told to depict “a Roman legion,” for instance, Gemini would show an anachronistic, cartoonish group of racially diverse foot soldiers while rendering “Zulu warriors” as Black.

It appears that Google (like some other AI vendors, including OpenAI) had implemented clumsy hardcoding under the hood to attempt to “correct” for biases in its model. In response to prompts like “show me images of only women” or “show me images of only men,” Gemini would refuse, asserting such images could “contribute to the exclusion and marginalization of other genders.” Gemini was also loath to generate images of people identified solely by their race (e.g., “white people” or “black people”) out of ostensible concern for “reducing individuals to their physical characteristics.”

Right wingers have latched on to the bugs as evidence of a “woke” agenda being perpetuated by the tech elite. But it doesn’t take Occam’s razor to see the less nefarious truth: Google, burned by its tools’ biases before (see: classifying Black men as gorillas, mistaking thermal guns in Black people’s hands as weapons, etc.), is so desperate to avoid history repeating itself that it’s manifesting a less biased world in its image-generating models, however misguided.

In her best-selling book “White Fragility,” anti-racist educator Robin DiAngelo writes about how the erasure of race (“color blindness,” by another phrase) contributes to systemic racial power imbalances rather than mitigating or alleviating them. By purporting to “not see color” or reinforcing the notion that simply acknowledging the struggle of people of other races is sufficient to label oneself “woke,” people perpetuate harm by avoiding any substantive conversation on the topic, DiAngelo says.

Google’s ginger treatment of race-based prompts in Gemini didn’t avoid the issue, per se, but disingenuously attempted to conceal the worst of the model’s biases. One could argue (and many have) that these biases shouldn’t be ignored or glossed over, but addressed in the broader context of the training data from which they arise, i.e., society on the World Wide Web.

Yes, the data sets used to train image generators generally contain more white people than Black people, and yes, the images of Black people in those data sets reinforce negative stereotypes. That’s why image generators sexualize certain women of color, depict white men in positions of authority and generally favor wealthy Western perspectives.

Some may argue that there’s no winning for AI vendors. Whether they tackle models’ biases or choose not to, they’ll be criticized. And that’s true. But I posit that, either way, these models are lacking in explanation, packaged in a fashion that minimizes the ways in which their biases manifest.

Were AI vendors to address their models’ shortcomings head on, in humble and transparent language, it’d go a lot further than haphazard attempts at “fixing” what’s essentially unfixable bias. We all have bias, the truth is, and we don’t treat people the same as a result. Nor do the models we’re building. And we’d do well to acknowledge that.

Here are some other AI stories of note from the past few days:

- Women in AI: TechCrunch launched a series highlighting notable women in the field of AI. Read the list here.

- Stable Diffusion v3: Stability AI has announced Stable Diffusion 3, the latest and most powerful version of the company’s image-generating AI model, based on a new architecture.

- Chrome gets GenAI: Google’s new Gemini-powered tool in Chrome allows users to rewrite existing text on the web, or generate something completely new.

- Blacker than ChatGPT: Creative ad agency McKinney developed a quiz game, Are You Blacker than ChatGPT?, to shine a light on AI bias.

- Calls for laws: Hundreds of AI luminaries signed a public letter earlier this week calling for anti-deepfake legislation in the U.S.

- Match made in AI: OpenAI has a new customer in Match Group, the owner of apps including Hinge, Tinder and Match, whose employees will use OpenAI’s AI tech to accomplish work-related tasks.

- DeepMind safety: DeepMind, Google’s AI research division, has formed a new org, AI Safety and Alignment, made up of existing teams working on AI safety but also broadened to encompass new, specialized cohorts of GenAI researchers and engineers.

- Open models: Barely a week after launching the latest iteration of its Gemini models, Google released Gemma, a new family of lightweight open-weight models.

- House task force: The U.S. House of Representatives has founded a task force on AI that, as Devin writes, feels like a punt after years of indecision that show no sign of ending.

More machine learnings

AI models seem to know a lot, but what do they actually know? Well, the answer is nothing. But if you phrase the question slightly differently… they do seem to have internalized some “meanings” that are similar to what humans know. Although no AI truly understands what a cat or a dog is, could it have some sense of similarity encoded in its embeddings of those two words that is different from, say, cat and bottle? Amazon researchers believe so.

Their research compared the “trajectories” of similar but distinct sentences, like “the dog barked at the burglar” and “the burglar caused the dog to bark,” with those of grammatically similar but different sentences, like “a cat sleeps all day” and “a girl jogs all afternoon.” They found that the ones humans would find similar were indeed internally treated as more similar despite being grammatically different, and vice versa for the grammatically similar ones. OK, I feel like this paragraph was a little confusing, but suffice it to say that the meanings encoded in LLMs appear to be more robust and sophisticated than expected, not totally naive.
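If “internally treated as more similar” sounds abstract, here is a minimal sketch of cosine similarity, one common way to compare embedding vectors. To be clear about the assumptions: the three-number vectors below are made-up toy values, not outputs from any real model, and this is not the Amazon researchers’ method, which compares “trajectories” rather than single word vectors.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy "embeddings" chosen by hand for illustration only.
toy_embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "bottle": [0.1, 0.2, 0.9],
}

cat_dog = cosine_similarity(toy_embeddings["cat"], toy_embeddings["dog"])
cat_bottle = cosine_similarity(toy_embeddings["cat"], toy_embeddings["bottle"])

print(f"cat vs. dog:    {cat_dog:.3f}")
print(f"cat vs. bottle: {cat_bottle:.3f}")
```

With these toy numbers, cat and dog score much closer to 1 than cat and bottle do, which is the kind of gap the question above is asking about.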

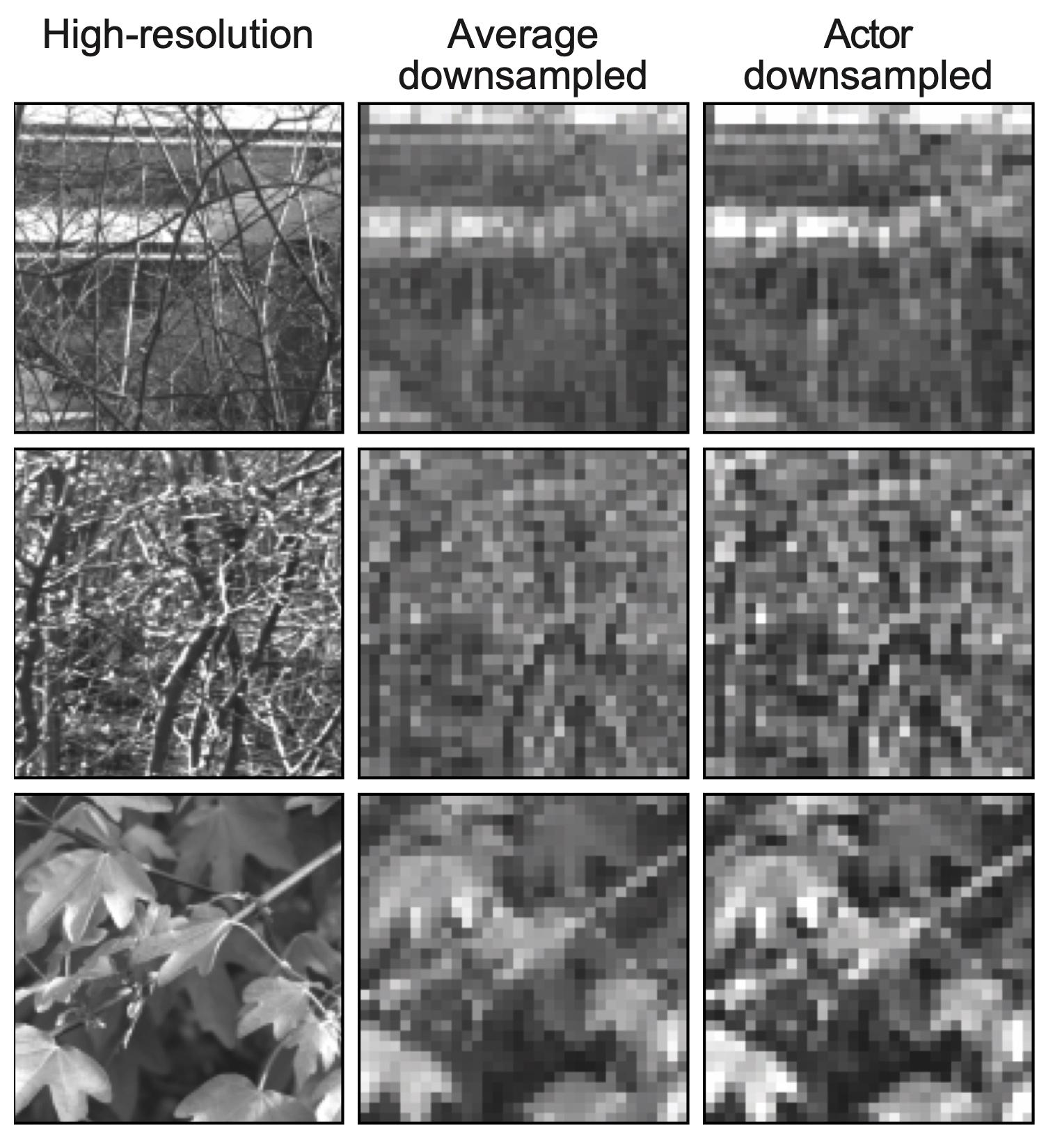

Neural encoding is proving useful in prosthetic vision, Swiss researchers at EPFL have found. Artificial retinas and other ways of replacing parts of the human visual system generally have very limited resolution due to the limitations of microelectrode arrays. So no matter how detailed the image is coming in, it has to be transmitted at a very low fidelity. But there are different ways of downsampling, and this team found that machine learning does a great job at it.

Image Credits: EPFL

“We found that if we applied a learning-based approach, we got improved results in terms of optimized sensory encoding. But more surprising was that when we used an unconstrained neural network, it learned to mimic aspects of retinal processing on its own,” said Diego Ghezzi in a news release. It does perceptual compression, basically. They tested it on mouse retinas, so it isn’t just theoretical.
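For a sense of what plain, non-learned downsampling looks like, here is a minimal sketch of block averaging, about the simplest baseline there is. It is an illustration under assumptions, not the EPFL team’s approach: the function name `average_pool` and the tiny 4x4 “image” are hypothetical, and a learned encoder would replace this fixed averaging rule with something trained.

```python
def average_pool(image, block):
    """Downsample a 2D grid by averaging non-overlapping block x block tiles."""
    rows, cols = len(image), len(image[0])
    pooled = []
    for r in range(0, rows - block + 1, block):
        pooled_row = []
        for c in range(0, cols - block + 1, block):
            # Collect every pixel in the current tile and average them.
            tile = [image[r + dr][c + dc]
                    for dr in range(block)
                    for dc in range(block)]
            pooled_row.append(sum(tile) / len(tile))
        pooled.append(pooled_row)
    return pooled

# Hypothetical 4x4 grayscale "image" (made-up values).
toy_image = [
    [0, 0, 8, 8],
    [0, 0, 8, 8],
    [4, 4, 2, 2],
    [4, 4, 2, 2],
]

low_res = average_pool(toy_image, 2)
print(low_res)  # [[0.0, 8.0], [4.0, 2.0]]
```

Every 2x2 tile collapses to one number, so sixteen pixels become four. The interesting question in the research is which details to keep when you are forced to throw most of them away.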

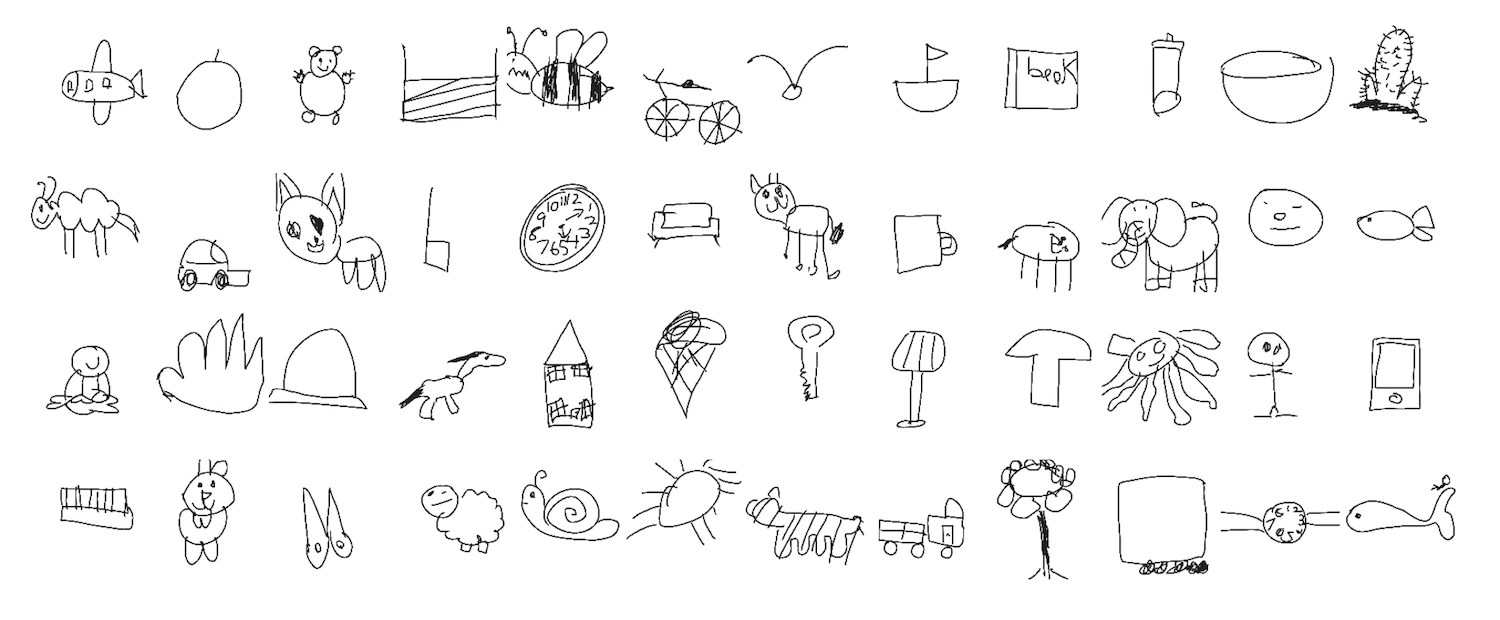

An interesting application of computer vision by Stanford researchers hints at a mystery in how children develop their drawing skills. The team solicited and analyzed 37,000 drawings by kids of various objects and animals, and also (based on kids’ responses) how recognizable each drawing was. Interestingly, it wasn’t just the inclusion of signature features like a rabbit’s ears that made drawings more recognizable by other kids.

“The kinds of features that lead drawings from older children to be recognizable don’t seem to be driven by just a single feature that all the older kids learn to include in their drawings. It’s something much more complex that these machine learning systems are picking up on,” said lead researcher Judith Fan.

Chemists (also at EPFL) found that LLMs are also surprisingly adept at helping out with their work after minimal training. It’s not just doing chemistry directly, but rather being fine-tuned on a body of work that chemists individually can’t possibly know all of. For instance, in thousands of papers there may be a few hundred statements about whether a high-entropy alloy is single or multiple phase (you don’t have to know what this means; they do). The system (based on GPT-3) can be trained on this type of yes/no question and answer, and soon is able to extrapolate from that.

It’s not some huge advance, just more evidence that LLMs are a useful tool in this sense. “The point is that this is as easy as doing a literature search, which works for many chemical problems,” said researcher Berend Smit. “Querying a foundational model might become a routine way to bootstrap a project.”

Last, a word of caution from Berkeley researchers, though now that I’m reading the post again I see EPFL was involved with this one too. Go Lausanne! The group found that imagery found via Google was more likely to enforce gender stereotypes for certain jobs and words than text mentioning the same thing. And there were also just way more men present in both cases.

Not only that, but in an experiment, they found that people who viewed images rather than reading text when researching a role associated those roles with one gender more reliably, even days later. “This isn’t only about the frequency of gender bias online,” said researcher Douglas Guilbeault. “Part of the story here is that there’s something very sticky, very potent about images’ representation of people that text just doesn’t have.”

With stuff like the Google image generator diversity fracas going on, it’s easy to lose sight of the established and frequently verified fact that the source of data for many AI models shows serious bias, and this bias has a real effect on people.